Circle

In the previous post, we drew a circle using an explicit formula:

(x, y) = radius · (cos θ, sin θ)

θ ∊ [0, 2π)

In this post, we'll start with the implicit form:

x² + y² - radius² = 0

We then turn it into a signed distance, negative inside the circle and positive outside:

s(x, y) = x² + y² - radius²
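The sign of s is what makes it useful: negative inside the circle, zero on it, and positive outside. Here is a minimal C sketch that assumes the circle is centred at the origin, as in the formula above. The name `implicit_circle` is hypothetical.

```c
/* Unnormalized implicit function s(x, y) = x² + y² - radius².
   s < 0 inside the circle, s == 0 on it, s > 0 outside. */
double implicit_circle(double x, double y, double radius)
{
    return x * x + y * y - radius * radius;
}
```

Note that s grows quadratically rather than linearly. At radius 10, a point 1 unit outside the circle gives s = 11² - 10² = 21, not 1, which is why the next step normalizes it.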

We can then normalize it by dividing through by its derivative at the zero crossing. Along a ray from the center, d/dr (r² - radius²) = 2r, which equals 2 · radius on the circle itself:

s(x, y) = ( x² + y² - radius² ) / ( 2 · radius )

We can visualize that signed distance function by mapping the signed distance directly to intensity:

Figures: the signed distance function for a circle, and sinc(x) = sin(x) / x.

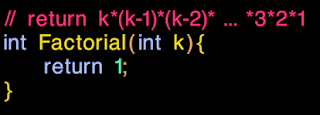

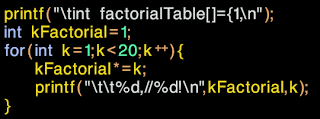

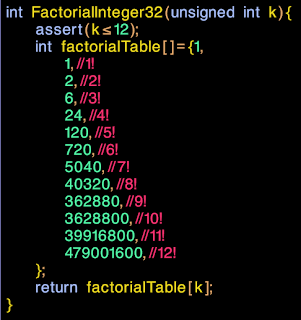

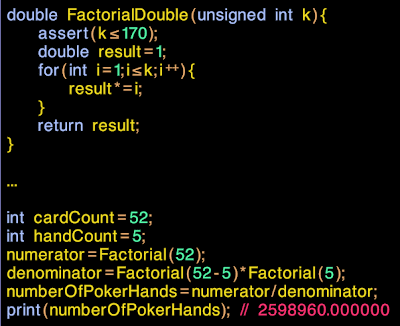

In code, that looks like this:

float alpha = sinc ( s * Pi );

But can we do better than that? Recall the Nyquist-Shannon sampling theorem: we can only recover a signal whose frequency is less than half the sample rate. At the moment, we're pushing frequencies right up to that limit. Some people like the look of that "poppiness" or "crunchiness", but personally, I prefer to back away a little from the limit and leave at least 20% headroom:

float alpha = sinc ( s * Pi * 0.8 );

And what of those negative lobes and moiré patterns? Let's chop them off, keeping only the central lobe of sinc between -π and π:

float WindowedSinc(float x)
{
    if (x <= -3.1415926) { return 0; }
    if (x >= 3.1415926) { return 0; }
    if (x == 0) { return 1; }
    return sin(x) / x;
}

One last consideration: we need to account for the transfer function, or gamma, of the monitor. Luckily, we can approximate this by taking the square root!

return float4( 1, 1, 1, sqrt(alpha) );

Results

On the right is our finished circle, filtered to 80% of the Nyquist limit and with approximate gamma correction. For comparison, I've also included the circles from the earlier post on the left.

Modern GPU Programming

I've used HLSL and DirectX in this post, but this technique works equally well in OpenGL. With only slight modifications, you can even use it with OpenGL ES on the iPad or Android.

Hopefully this post has given you a flavor of some of the considerations in modern GPU programming. If you'd like to know more, or have any suggestions for improvements, please let me know in the comments below!

Appendix - Circle drawing in HLSL

The following three functions are hereby licensed under CC0.

float radius;
float2 center;
float3 strokeColor;

// HLSL has no built-in Pi constant, so define one
static const float Pi = 3.14159265;

// From http://missingbytes.blogspot.com/2012/05/circles_12.html


float WindowedSinc(float x)
{
    if (x <= -Pi) { return 0; }
    if (x >= Pi) { return 0; }
    if (x == 0) { return 1; }
    return sin(x) / x;
}

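// From http://missingbytes.blogspot.com/2012/05/circles_12.html
float GammaCorrectAlpha(float3 color, float alpha)
{
    // Curve used for dark stroke colors
    float a = 1 - sqrt(1 - alpha);
    // Square-root curve used for light stroke colors, as in the main text
    float b = sqrt(alpha);
    // Average brightness of the stroke color: 0 = black, 1 = white
    float t = (color.x + color.y + color.z) / 3;
    // Blend between the two curves by brightness
    return a * (1 - t) + b * t;
}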

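float4 CirclePixelShader(in float2 screenPosition : TEXCOORD) : COLOR
{
    // Offset from the circle's center. s is treated as a distance in pixels,
    // so center, radius and screenPosition are assumed to be in pixel units.
    float xx = center.x - screenPosition.x;
    float yy = center.y - screenPosition.y;
    // Normalized signed distance: (xx*xx + yy*yy - radius*radius) / (2*radius)
    float s = (xx * xx + yy * yy - radius * radius) / 2 / radius;
    // Windowed sinc at 80% of the Nyquist limit
    float alpha = WindowedSinc(s * Pi * 0.8);
    float alphaScreen = GammaCorrectAlpha(strokeColor, alpha);
    return float4(strokeColor.xyz, alphaScreen);
}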